Published: August 29, 2022

Machine Learning Services – Understanding Transformers & their Applications

Machine learning services cover a huge range of products that we use daily but know very little about. One family of models behind many of those products is the Transformer.

Now, whether you are an AI enthusiast, who just wants to know more about ML technologies, a business owner trying to understand the various applications of machine learning services and solutions, or a machine learning expert looking for information, you have come to the right place to learn about the Transformers in Machine Learning.

The Growth of AI ML Development Companies

Currently, 37% of total businesses and organizations utilize AI to their advantage. To do so, they are either hiring in-house experts for the job or getting an AI ML development company to deploy resources and improve their overall operations. Either way, the industry is expected to grow exponentially in the coming decade, and companies that are investing in technology today will benefit tomorrow.

Machine Learning Services – The Basics of Transformers

In the most basic sense, a Transformer is a model that takes an input sequence and computes an output sequence from it. A translator is a prime example: it takes your input in one language and converts it into the desired output language.

In essence, Transformers process sequential data, such as natural language, and are applied to tasks like translation and text summarization.

Other techniques used for sequential data include:

RNN – Recurrent Neural Networks

LSTM – Long Short-Term Memory

GRU – Gated Recurrent Units

While all three have the same purpose, they differ significantly in their architecture.

Now that you understand the purpose of Transformers, let's go into detail about the various technologies in play.

RNN

RNNs are a type of neural network in which data is fed sequentially, one time step at a time. An RNN has a hidden state that encodes information from previous steps, and it passes the current hidden state on to the next time step. RNNs were designed for a range of applications, including:

Speech Recognition

Translation

Language Modeling

Text Summarization

Image Captioning

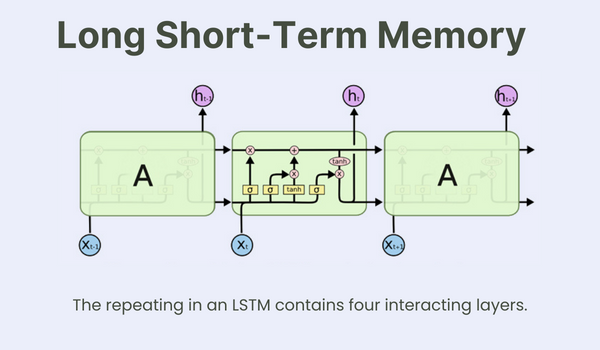
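The way an RNN carries its hidden state from one time step to the next can be sketched in a few lines. This is a minimal illustration, assuming a single scalar input and a single scalar hidden unit; the function names and the weight values (`w_x`, `w_h`, `b`) are hypothetical and not taken from any library.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One time step: mix the current input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(inputs, w_x=0.5, w_h=0.8, b=0.0):
    """Feed the inputs one at a time, passing the hidden state forward."""
    h = 0.0  # initial hidden state
    states = []
    for x in inputs:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Illustrative sequence: only the first step carries a non-zero input.
states = run_rnn([1.0, 0.0, 0.0])
```

Even though the second and third inputs are zero, the later hidden states stay non-zero, because each one is computed from the state before it.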

LSTM

LSTM is a special kind of RNN. The technology can learn long-term dependencies and comprises three main gates:

Forget Gate - Decides how much of the information from the previous timestamp should be carried to the next timestamp.

Input Gate - Decides what new information can be added from the current timestamp.

Output Gate - Decides how much of the cell state is exposed as the hidden state, which is the output passed to the next timestamp.

It can do so with the help of a cell state that keeps long-term dependencies, alongside the hidden state.
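The three gates and the cell state can be sketched as a single LSTM step. This is a simplified scalar version under stated assumptions: real LSTMs use weight matrices and vectors, and the parameter names and values in `params` are hypothetical, chosen only for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One scalar LSTM step; p holds the (hypothetical) weights and biases."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate values
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    c = f * c_prev + i * g      # cell state: keep part of the old, add some new
    h = o * math.tanh(c)        # hidden state: the exposed part of the cell state
    return h, c

# Illustrative parameter values, not learned from data.
params = {
    "wf": 0.5, "uf": 0.1, "bf": 1.0,
    "wi": 0.6, "ui": 0.2, "bi": 0.0,
    "wg": 0.9, "ug": 0.3, "bg": 0.0,
    "wo": 0.4, "uo": 0.1, "bo": 0.0,
}

h, c = 0.0, 0.0
for x in [1.0, -0.5, 0.25]:
    h, c = lstm_step(x, h, c, params)
```

When the forget gate is close to 1 and the input gate is close to 0, the cell state passes through almost unchanged, which is how the cell keeps long-term information.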

GRU

GRU is essentially an advancement on the standard RNN. It is more recent than LSTM and, while similar, is simpler: it has no separate cell state, only a hidden state. It uses two gates:

Reset Gate

Update Gate

A GRU has fewer parameters than an LSTM, so it is generally faster to train.

The purpose of gates is to act as learned thresholds that help the network decide how much information to keep from the previous state and how much to take from the new input.
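A GRU step with its reset and update gates can be sketched as follows. This is a scalar simplification with hypothetical parameter names and values. It uses the convention where an update gate near 0 keeps the old hidden state; some references swap the roles of `z` and `1 - z`.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gru_step(x, h_prev, p):
    """One scalar GRU step; p holds the (hypothetical) weights and biases."""
    z = sigmoid(p["wz"] * x + p["uz"] * h_prev + p["bz"])  # update gate
    r = sigmoid(p["wr"] * x + p["ur"] * h_prev + p["br"])  # reset gate
    # The reset gate decides how much of the old state feeds the candidate.
    h_cand = math.tanh(p["wh"] * x + p["uh"] * (r * h_prev) + p["bh"])
    # The update gate blends the old state with the candidate.
    return (1.0 - z) * h_prev + z * h_cand

# Illustrative parameter values, not learned from data.
params = {
    "wz": 0.5, "uz": 0.1, "bz": 0.0,
    "wr": 0.4, "ur": 0.2, "br": 0.0,
    "wh": 0.9, "uh": 0.3, "bh": 0.0,
}

h = 0.0
for x in [1.0, 0.5, -0.5]:
    h = gru_step(x, h, params)
```

Unlike the LSTM, there is only one state value to carry forward, which is part of why the GRU has fewer parameters.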

Understanding The Encoder-Decoder Architecture

Transformers use an encoder-decoder architecture. The encoder's role is to extract features from the input sentence, and the decoder is responsible for using those features to create an output sentence.

A transformer contains several encoder blocks, known as the encoder stack, and the input passes through each block in turn. The output of the last encoder block then becomes the input features of the decoder stack, along with a special token such as End of Sentence (EOS).

The decoder also has several blocks, known as the decoder stack.

The decoder uses the encoded input, together with the tokens it has already produced, to generate the output one token at a time.

Attention

Attention is the part of a neural network that mimics cognitive attention in humans. It helps determine which tokens or parts of the input need more weight and which need less.

Attention is good at preserving information across long sequences, something the earlier recurrent systems struggled to do.

The result is that some parts of the input are enhanced while others are diminished, which helps the network focus on small yet essential elements of the data.

Scaled Dot-Product Attention

Multi-Head Attention

Attention predates the Transformer. The Transformer model in ML was built on top of the attention mechanism.

Math is the language of computers. Hence, it is only natural that both rely on mathematical formulas.
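For reference, the two attention variants named above are usually written as follows. The symbols are the standard ones from the Transformer literature rather than from this post: Q, K, and V are the query, key, and value matrices, d_k is the key dimension, h is the number of heads, and the W matrices are learned projections.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V

\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\, W^{O},
\qquad
\mathrm{head}_i = \mathrm{Attention}\!\left(Q W_i^{Q},\; K W_i^{K},\; V W_i^{V}\right)
```

Dividing by the square root of d_k keeps the dot products from growing too large before the softmax is applied.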

Machine Learning - How Attention Works

In the learning phase, attention works as follows. You give the model sentences with a special token at the end of each one, which marks where the next sentence starts. Attention weights are generated from the input according to the attention formula, and they assign a weight to every piece of information. The hidden states are multiplied by these weights to form the context vector, and the output is generated from it.

In this process, we get the hidden states, score them using the already learned weights, normalize the scores, and multiply the two to get the context vector that is used to produce the outputs.
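The steps above (score the hidden states, normalize the scores, take the weighted sum) can be sketched in plain Python. This is a minimal single-query example using scaled dot-product scoring; the function names and the toy vectors are assumptions made for illustration, and a real model would first apply learned projections to the query and hidden states.

```python
import math

def softmax(scores):
    """Normalize scores into weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, hidden_states):
    """Return the attention weights and the context vector for one query."""
    d = len(query)
    # Score each hidden state against the query (scaled dot product).
    scores = [
        sum(q * h for q, h in zip(query, state)) / math.sqrt(d)
        for state in hidden_states
    ]
    weights = softmax(scores)
    # Context vector: weighted sum of the hidden states.
    context = [
        sum(w * state[j] for w, state in zip(weights, hidden_states))
        for j in range(d)
    ]
    return weights, context

# Toy hidden states for a three-token input.
hidden_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
query = [1.0, 0.0]
weights, context = attend(query, hidden_states)
```

The first and third hidden states line up with the query, so they receive more weight than the second one, and the context vector leans toward them.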

BERT – Google’s Transformer Model

Currently, one of the best examples of Transformers in use is BERT, a transformer model by Google. BERT stands for Bidirectional Encoder Representations from Transformers. It is pretrained once and then fine-tuned for various purposes. BERT is an encoder-only stack. It is called bidirectional because each token's representation draws on context from both its left and its right.

It comes in a range of variations and related models that tackle different problems, namely RoBERTa, ALBERT, ELECTRA, XLNet, DistilBERT, SpanBERT, and BERTSUM.

Here are some datasets commonly used with BERT:

MNLI: Multi-Genre Natural Language Inference

QQP: Quora Question Pairs

SQuAD: Stanford Question Answering Dataset

At MoogleLabs, a machine learning consulting company, we are working on a question generation task where the text is in the Hungarian language. For this purpose, we are using a model based on SQuAD, which has been working well.

In essence, you can take the appropriate transformer model and change the last few layers to get the appropriate results.

Transfer learning, which relies on information gained from solving one problem to resolve a different yet related problem, helps broaden a model's range of applications.

Moreover, during the transfer learning, new IoT can be developed to better meet the needs of the new problem.
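The idea of keeping a pretrained model and changing only its last layers can be illustrated with a toy example. This is a sketch under heavy assumptions: `FROZEN_WEIGHTS` is a stand-in for a pretrained model body (not an actual transformer), the data is made up, and only a small new classification head is trained.

```python
import math

# Stand-in for a pretrained model body: these weights are never updated.
FROZEN_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]

def frozen_features(x):
    """Features from the frozen 'pretrained' layers."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]

def predict(head, x):
    """Probability from the new, trainable head on top of the frozen features."""
    feats = frozen_features(x)
    z = sum(w * f for w, f in zip(head["w"], feats)) + head["b"]
    return 1.0 / (1.0 + math.exp(-z))

def train_head(data, epochs=200, lr=0.5):
    """Train only the head with plain gradient descent on the log loss."""
    head = {"w": [0.0, 0.0], "b": 0.0}
    for _ in range(epochs):
        for x, y in data:
            feats = frozen_features(x)
            err = predict(head, x) - y  # gradient of the log loss w.r.t. z
            head["w"] = [w - lr * err * f for w, f in zip(head["w"], feats)]
            head["b"] -= lr * err
    return head

# Made-up data for the new task: label 1 when the first value dominates.
data = [([1.0, 0.0], 1), ([0.9, 0.1], 1), ([0.0, 1.0], 0), ([0.1, 0.9], 0)]
head = train_head(data)
```

Because only the head is updated, training touches three numbers instead of the whole model, which is the main practical appeal of this approach.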

Applications of Transformers

Transformers technology is being used in various sections of business operations. However, not many people understand the full scope of technology. Here are some of the applications of Transformers that are in play today.

Sentiment Analysis

Sentiment analysis aims to determine the emotional tone of a text, be it positive, negative, or neutral. Businesses use this technology to gauge people’s sentiments about their products and services in social data.

Question-Answering

The question-answering model is responsible for answering questions humans ask in natural language. By combining information retrieval and natural language processing, it can give users a human-like interaction without the business incurring the cost of maintaining a customer support team.

Translation

Google Translate is the most renowned example of this application of machine learning services in play. While it comes in handy to understand content in a foreign language, businesses use the application to cater to their diverse audience and provide content to people worldwide.

Text Summarization

Text summarization refers to shortening the text without compromising the semantic structure of the body of information. It can occur through an extractive or abstractive approach.

Text Classification

Text classification assigns text to predefined categories. It essentially tags the content based on its category.

Named Entity Recognition (NER)

Named entity recognition (NER) is an information extraction task that locates and categorizes particular entities in one or several bodies of text.

Speech Recognition

When humans offer speech input for typing or searching, speech recognition turns that input into a form the computer can understand and act on. It can include both recognition and translation of the speech input.

Language Modeling

Language modeling analyzes text data to learn which words are likely to follow others, which provides a basis for word predictions. It is mainly used to generate text as output.
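The idea of learning word predictions from data can be shown with a much simpler technique than a Transformer. The sketch below is a bigram count model, which only looks at the previous word; it is a swapped-in illustration of next-word prediction, not how a Transformer language model works internally. The function names and the corpus are made up.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count which word follows which in the training text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

corpus = "the model reads the text and the model predicts the next word"
counts = train_bigram(corpus)
suggestion = predict_next(counts, "the")
```

A Transformer replaces these raw counts with learned representations and attention over the whole preceding context, but the task is the same: score the possible next words and pick a likely one.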

Image Captioning

Transformers can also generate image captions. This consists of taking information in a visual format and decoding it into a descriptive caption for the image.

Text-to-Image Generation

Text-to-image generation includes providing cues to the software and letting it create an image per the text available. Here, the machine needs to comprehend the text to create the image.

Video Generation

Video generation through transformers includes mapping a sequence of random vectors to a sequence of video frames. Here, the motion is not affected by the text.

Article Generation

Article generation includes providing the system keywords and letting it create content based on the input. It reduces content-generation time significantly.

Chatbots

Many companies use chatbot development services to create dedicated customer support on their website. The system makes it easier to help customers better while reducing pressure on their employees. It includes systems that take input from the users and provide more relevant information as per the query through machine learning.

Biological Sequence Analysis

Scientists use machine learning to align, index, compare, and analyze various biological sequences.

There are several other applications of Transformers in Machine Learning. With the right vision, this technology can help you reach new heights.

Watch Our Webinar

We at MoogleLabs held a live webinar for people interested in learning about the basics of Transformers in Machine Learning and their various applications. Our Senior ML Engineer, Vaibhav Kumar, led the webinar, offering thorough information about the latest technology.

You can watch the video here and download the presentation here.

Final Thoughts – Deploy NLP Machine Learning Services for Your Business

Natural Language Processing has become an essential facet of every organization. A chatbot on your website serves as the initial point of contact for several users who have questions about the brand. It can also reduce the workload on employees as repetitive questions can have direct answers through machine learning services that can process natural language and provide appropriate solutions.

A machine learning consulting company like MoogleLabs can help you find the most appropriate ways to bring machine learning into your operations. This can include NLP services for your employees and your customers, as well as bespoke solutions for your business.

The presence of machine learning in your operations can improve business operations, reduce operation costs, and increase customer satisfaction, among several other benefits.

Vaibhav Kumar

As a science enthusiast with a knack for creativity and data, I have joined the MoogleLabs family as an ML Engineer. My five years of experience filled with innovation, conceptualization, visualization, and technology evaluation have helped me specialize in the field. My goal in life is to create inventions that make the world a better place for people.
